
Alexa Skills Development with ButterCMS

Posted by Francesco Strazzullo on September 27, 2023

Alexa, Amazon’s digital assistant, is becoming more and more popular all over the world. Apart from the Amazon Echo series, a lot of devices ship with an out-of-the-box integration with Alexa. For a developer, one of the most interesting aspects of this ecosystem is the opportunity to create custom applications for it. These applications are called Skills.

Why Alexa and ButterCMS?

Alexa, and other smart speakers, are a great way to serve text and audio content to your users. Furthermore, serving your content through Alexa voice service could be a way to make users interact with your content or services in a new and unexpected way.

A headless CMS is a good way to provide content for an Amazon Alexa Skill because the Skill can reuse the same content that already powers your website or application. In this way, your custom Alexa Skill can use existing content from day one.

ButterCMS is a good match for Alexa because it exposes text, audio and images through a REST API with ready-made clients, which makes the integration with an Alexa Skill very easy.

Getting Started

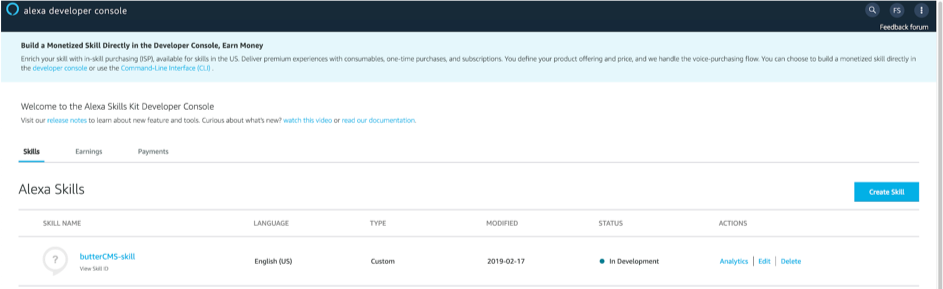

Before we start looking at the code, let’s see what tools we need to start developing our first Skill. The first thing that you need to do is create an Amazon account for the Alexa Developer Console. This web console lets you see all your skills and publish them to the Amazon Skills Store. Before publishing your Amazon Alexa Skill, you’ll need to pass Amazon’s certification process, which is similar to the review process of a mobile application store.

On the technical side, an Alexa Skill is just a Lambda function. You can host this Lambda directly on the console or on a dedicated Amazon Web Services (AWS) account (as I did for my examples). To build a Skill and deploy it, we'll need to install ask-cli, a Node.js-based CLI tool created by Amazon. So let’s install it using the following command:

    npm install -g ask-cli

After the installation of the tool, you have to link your Alexa Developer Console account to it. To do that, run:

    ask init

Then follow the instructions that show up on the terminal. After this first phase, you’re ready to create a new Skill project with the following command:

    ask new [skill name]

This command will create a new basic project in a directory named after the project itself. Now, to test the newly created Skill, move your terminal to the newly created directory and type:

    ask deploy

After that, you can test your Skill. Remember that the Alexa Skill is not locally testable: your code has to run in the cloud. You can test your Skill with the Alexa App, a simulator or any Alexa-enabled device linked with your Amazon account. I strongly suggest testing with a real device to better understand how users will use the Skill.

Our first Alexa Skill

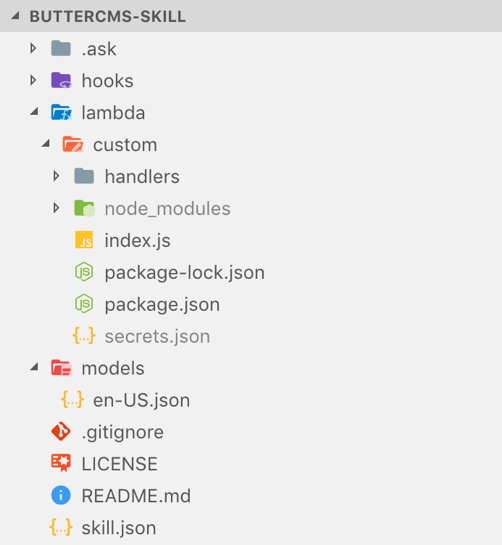

The basic structure of an Alexa Skill created with the ask-cli tool is the following:

Every Alexa Skill consists of three main parts:

- The Skill Manifest in the skill.json file
- The Interaction Models in the models directory
- The Lambda code in the lambda/custom directory

Let’s analyze all three elements of our Skill in detail.

The Skill Manifest

The Manifest contains all the basic information of your Skill like name, description and topics. It also defines the countries where you want to publish the Skill. This is our basic Manifest.

    {
      "manifest": {
        "publishingInformation": {
          "locales": {
            "en-US": {
              "summary": "ButterCMS Example Skill",
              "examplePhrases": [
                "Alexa open butterCMS-skill"
              ],
              "name": "butterCMS-skill",
              "description": "ButterCMS Example Skill"
            }
          },
          "isAvailableWorldwide": true,
          "testingInstructions": "Sample Testing Instructions.",
          "category": "KNOWLEDGE_AND_TRIVIA",
          "distributionCountries": []
        },
        "apis": {
          "custom": {
            "endpoint": {
              "sourceDir": "lambda/custom",
              "uri": "ask-custom-butterCMS-skill-default"
            }
          }
        },
        "manifestVersion": "1.0"
      }
    }

Notice that apart from basic information, we also tell the system where our lambda code is for this Skill.

The Interaction Model

The purpose of the first version of our Skill is just to read the latest post title from a ButterCMS instance. This is a conversation sample between a user and Alexa voice service.

User: “Alexa, open Butter CMS Example”

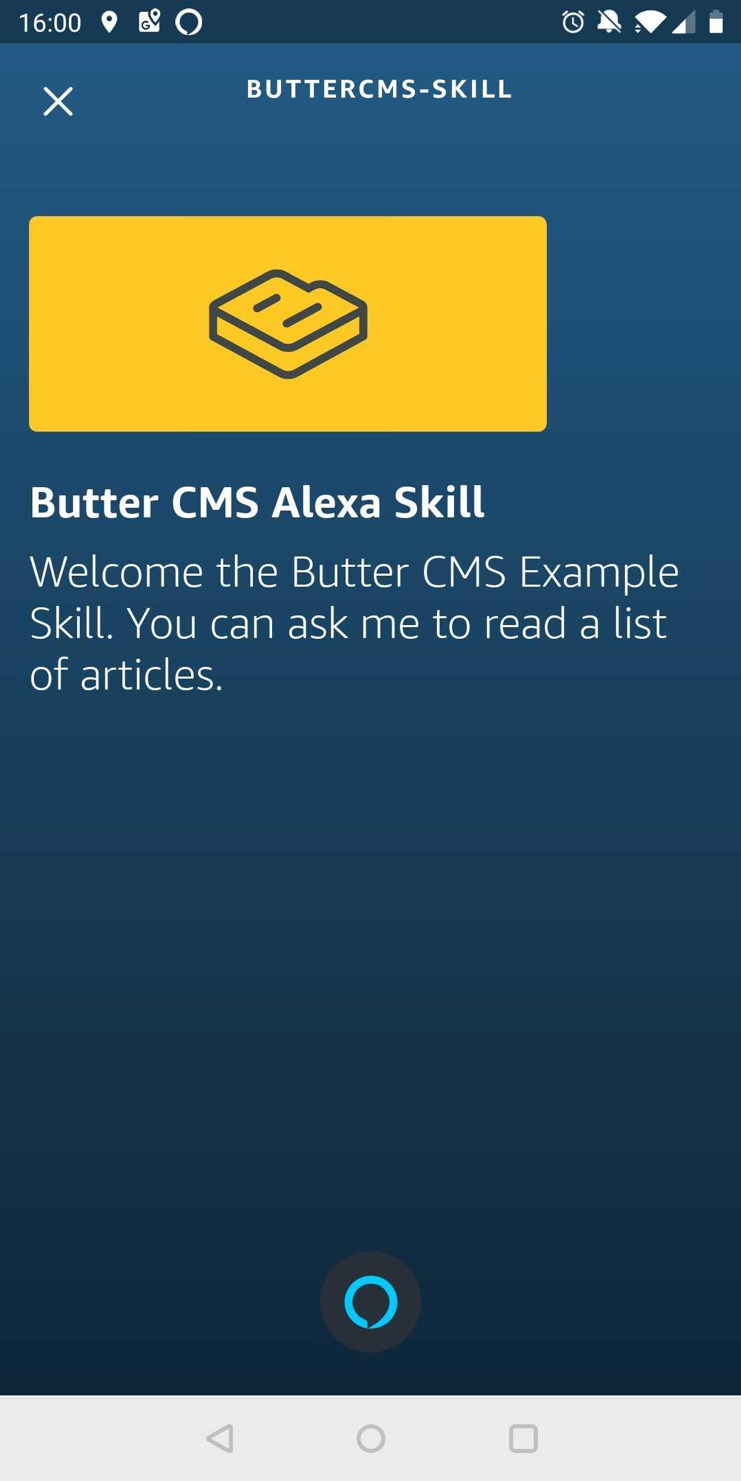

Alexa: “Welcome to the Butter CMS Example Skill. You can ask me to read a list of articles.”

User: “Read the article list”

Alexa: “This is the list of the last two posts. [Post Title 1] [Post Title 2]”

The user can also cancel the invocation of the Skill without listening to the article list.

User: “Alexa, open Butter CMS Example”

Alexa: “Welcome to the Butter CMS Example Skill. You can ask me to read a list of articles.”

User: “Stop”

Alexa: “Goodbye!”

It’s easy for us (as humans) to understand that the user wants Alexa to read the list of the last posts when they say “Read the article list” and to close the application when they say “Stop”, but how can we make this easy to understand for Alexa? That’s the purpose of the Interaction Model: it describes the commands that our users can give. The main concept of the Alexa Interaction Model (and of most conversational frameworks, like Dialogflow) is the Intent. An Intent is a way to represent a command that the user activates by voice. In a nutshell, the Interaction Model is the set of all the Intent definitions for the voice user interface.
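To make the idea of an Intent more concrete, here is a toy sketch that pairs intent names with sample phrases and looks up the intent for a spoken sentence. It is only an illustration: the real Alexa service generalizes from the samples instead of matching them exactly, and `toyModel`, `normalize` and `resolveIntent` are hypothetical names, as is the "cancel" sample given to the built-in intent.

```javascript
// Toy interaction model: every intent has a name and a list of sample phrases.
// The "cancel" sample is made up for this sketch.
const toyModel = {
  intents: [
    { name: 'AMAZON.CancelIntent', samples: ['cancel'] },
    { name: 'ArticleListIntent', samples: ['read the article list'] }
  ]
}

// Normalize a phrase so that case and surrounding whitespace do not matter.
function normalize (utterance) {
  return utterance.trim().toLowerCase()
}

// Return the name of the first intent with a matching sample, or null.
// Exact matching only: the real service is far more flexible than this.
function resolveIntent (model, utterance) {
  const spoken = normalize(utterance)
  const match = model.intents.find(intent =>
    intent.samples.some(sample => normalize(sample) === spoken)
  )
  return match ? match.name : null
}
```

Calling `resolveIntent(toyModel, 'Read the article list')` returns the intent name, while a phrase that matches no sample returns `null`.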

An Intent is composed of a name, which indicates the kind of operation that the user wants to perform, and a set of samples, a series of possible commands that the user can say to invoke that Intent. There are two kinds of Intents: custom and built-in. Built-in Intents are mostly for short responses like “Yes”, “No”, “Stop” or “Cancel”; for these Intents the samples are optional. The custom ones are for our domain model. This is the Interaction Model of our simple application.

    {
      "interactionModel": {
        "languageModel": {
          "invocationName": "butter cms example",
          "intents": [
            {
              "name": "AMAZON.CancelIntent",
              "samples": []
            },
            {
              "name": "ArticleListIntent",
              "samples": [
                "read the article list"
              ]
            }
          ]
        }
      }
    }

The Lambda Function

Let’s now take a look at the core of our Alexa Skill: the Lambda Function. For these examples, I decided to work with JavaScript, but you can also use other languages like Java or Python. The purpose of this Lambda is to respond to the Intents invoked by the user. I used the official Alexa Skills Kit SDK for Node.js by Amazon. This is the code of the entry point of our Lambda.

    const Alexa = require('ask-sdk-core')

    const LaunchRequestHandler = require('./handlers/launch')
    const ErrorHandler = require('./handlers/error')
    const ArticleListIntentHandler = require('./handlers/articleList')
    const CancelAndStopIntentHandler = require('./handlers/cancel')

    const skillBuilder = Alexa.SkillBuilders.custom()

    exports.handler = skillBuilder
      .addRequestHandlers(
        LaunchRequestHandler,
        ArticleListIntentHandler,
        CancelAndStopIntentHandler
      )
      .addErrorHandlers(ErrorHandler)
      .lambda()

As you can see, in the abstraction of the Alexa Skills Kit SDK, the basic “building block” of an Alexa Skill is the handler. We have a handler for every Intent that we defined in our Interaction Model. In addition to these two, we also have a handler for the “Launch Request”, or in other words, the handler that is invoked when the user activates our custom Alexa Skill.

Let’s see the code of this specific handler.

    // IMAGE_URL is assumed to be a constant defined elsewhere in the project
    // that holds the URL of the image shown on the card.
    const LaunchRequestHandler = {
      canHandle (handlerInput) {
        return handlerInput.requestEnvelope.request.type === 'LaunchRequest'
      },
      handle (handlerInput) {
        const speechText = 'Welcome to the Butter CMS Example Skill. You can ask me to read a list of articles.'

        return handlerInput.responseBuilder
          .speak(speechText)
          .reprompt(speechText)
          .withStandardCard(
            'Butter CMS Alexa Skill',
            speechText,
            IMAGE_URL)
          .getResponse()
      }
    }

    module.exports = LaunchRequestHandler

A handler has two methods, canHandle and handle. For every user request, the SDK loops over the handlers and calls the canHandle method of each one. The first handler that returns true becomes the current handler: its handle method is invoked and the result is sent to the client. It’s very similar to the find method of JavaScript arrays. In this specific case, the handler is the current one for a request of type 'LaunchRequest', that is, the first request after the activation of the Skill itself.
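The selection logic described above can be sketched in a few lines of plain JavaScript. This is not the SDK's actual code (the real dispatcher may, for example, work asynchronously); `dispatch`, the two simplified handlers and the hand-written inputs are hypothetical and exist only to show the "first canHandle that returns true wins" idea.

```javascript
// Two simplified handlers with the same canHandle/handle shape used in the article.
const launchHandler = {
  canHandle (input) {
    return input.requestEnvelope.request.type === 'LaunchRequest'
  },
  handle () {
    return 'welcome'
  }
}

const articleListHandler = {
  canHandle (input) {
    const request = input.requestEnvelope.request
    return request.type === 'IntentRequest' &&
      request.intent.name === 'ArticleListIntent'
  },
  handle () {
    return 'article list'
  }
}

// Hypothetical dispatcher: pick the first handler whose canHandle returns true.
function dispatch (handlers, input) {
  const current = handlers.find(handler => handler.canHandle(input))
  if (!current) {
    throw new Error('No handler can process this request')
  }
  return current.handle(input)
}

// Hand-written inputs, reduced to the fields that the handlers inspect.
const launchInput = {
  requestEnvelope: { request: { type: 'LaunchRequest' } }
}
const intentInput = {
  requestEnvelope: {
    request: { type: 'IntentRequest', intent: { name: 'ArticleListIntent' } }
  }
}
```

Because the first match wins, the order in which handlers are registered matters whenever two of them could accept the same request.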

Let’s take a deeper look at the handle method in this first handler. The speak method makes the speaker “talk”. When you need some kind of follow-up from the user, as in this case, it’s good practice to also use the reprompt method. With it, the smart speaker asks the user for another command after 8 seconds of inactivity. If the user doesn’t give any input after another 8 seconds, the Skill stops. The withStandardCard method is useful to show additional information on devices with a screen (like the Echo Spot or the mobile application).

This first handler is quite straightforward: it just replies with a constant text. Let’s see how to create a handler that reads the last posts from a ButterCMS account.
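To see what these builder calls roughly produce, the sketch below assembles a comparable response as a plain object. It is a simplified stand-in and not the SDK's implementation: `buildLaunchResponse` is a hypothetical function, and the field names reflect my understanding of the Alexa response format, so check them against the official reference before relying on them.

```javascript
// Hypothetical stand-in for the chain speak().reprompt().withStandardCard().
function buildLaunchResponse (speechText, imageUrl) {
  // Spoken output is sent as SSML wrapped in a <speak> element.
  const outputSpeech = { type: 'SSML', ssml: `<speak>${speechText}</speak>` }

  return {
    outputSpeech,
    // The reprompt is what Alexa says if the user stays silent.
    reprompt: { outputSpeech },
    // Keeping the session open lets the user answer the prompt.
    shouldEndSession: false,
    // The card is shown on devices with a screen and in the Alexa app.
    card: {
      type: 'Standard',
      title: 'Butter CMS Alexa Skill',
      text: speechText,
      image: { smallImageUrl: imageUrl }
    }
  }
}
```

The image URL passed in is whatever your Skill uses for its card; any value used to try the function out is just a placeholder.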

    const secrets = require('../secrets.json')
    const butter = require('buttercms')(secrets.BUTTER_CMS_API_KEY)

    const ArticleListIntentHandler = {
      canHandle (handlerInput) {
        return handlerInput.requestEnvelope.request.type === 'IntentRequest' &&
          handlerInput.requestEnvelope.request.intent.name === 'ArticleListIntent'
      },
      async handle (handlerInput) {
        // Fetch the five most recent posts and keep only their titles
        const response = await butter.post.list({ page: 1, page_size: 5 })
        const titles = response.data.data.map(p => p.title)

        // join('') avoids the commas that an array gets when interpolated directly
        const message = `<speak>
          <p>This is the list of the last ${titles.length} posts.</p>
          ${titles.map(t => `<p>${t}</p>`).join('')}
        </speak>`

        return handlerInput.responseBuilder
          .speak(message)
          .withSimpleCard(
            'Article List',
            titles.join('\n'))
          .getResponse()
      }
    }

    module.exports = ArticleListIntentHandler

In this case, we’ve used the NodeJS ButterCMS client to fetch the last five posts. Then we get the titles to create a summary that Alexa voice service can use to speak to our users. Notice that the message is written in some sort of markup language. This language is called Speech Synthesis Markup Language (SSML), and it’s useful to create very dynamic text-to-speech integration. You can change the voice, the volume or the pitch. It’s a very complete language, and if you’re interested I would suggest you read the Amazon Alexa official reference. In this simple scenario, we just use a <p> tag to create a paragraph, with a strong break before and after the tag itself.
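Since SSML is XML-based, a post title that contains a character such as & or < could produce invalid markup. The sketch below shows one possible way to build the message safely; `escapeSsml` and `buildArticleListSsml` are hypothetical helpers and not part of the ButterCMS client or the Alexa SDK. It also joins the paragraphs explicitly, because an array interpolated directly into a template literal is converted to a string with commas between its items.

```javascript
// Escape the characters that have a special meaning in XML-based markup.
function escapeSsml (text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;')
}

// Build the spoken message: an intro paragraph followed by one <p> per title.
function buildArticleListSsml (titles) {
  const paragraphs = titles
    .map(title => `<p>${escapeSsml(title)}</p>`)
    .join('')
  const intro = `<p>This is the list of the last ${titles.length} posts.</p>`
  return `<speak>${intro}${paragraphs}</speak>`
}
```

For example, `buildArticleListSsml(['Tom & Jerry'])` yields a message in which the ampersand appears as `&amp;`, so the markup stays well-formed.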

Streaming Audio Files

Another possibility that the Alexa Skills Kit SDK gives to developers is to stream audio files. We are going to modify our basic example, letting the user listen to the last episode of a podcast, using ButterCMS as a backend system.

The first thing to do is to add the AUDIO_PLAYER interface to our Skill Manifest. This will tell Amazon (and our users) that our custom Skill can play audio files.

    {
      "manifest": {
        "publishingInformation": {
          ...
        },
        "apis": {
          "custom": {
            "endpoint": {
              "sourceDir": "lambda/custom",
              "uri": "ask-custom-butterCMS-skill-default"
            },
            "interfaces": [
              {
                "type": "AUDIO_PLAYER"
              }
            ]
          }
        },
        "manifestVersion": "1.0"
      }
    }

In order to create an Audio Player Skill, we also need to support some built-in Intents such as “Pause”, “Help” or “Resume”. We also have a new custom Intent called PodcastIntent, which expresses the user’s wish to listen to the last podcast.

{

"interactionModel": {

"languageModel": {

"invocationName": "butter cms example",

"intents": [

...

{

"name": "PodcastIntent",

"samples": [

"I want to listen to the podcast"

]

},

{

"name": "AMAZON.HelpIntent",

"samples": []

},

{

"name": "AMAZON.PauseIntent",

"samples": []

},

{

"name": "AMAZON.ResumeIntent",

"samples": []

}

]

}

}

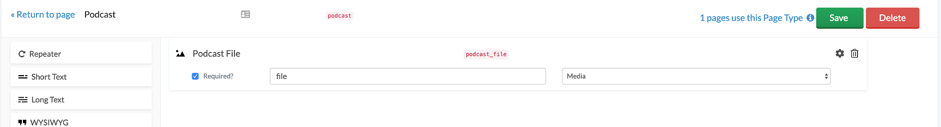

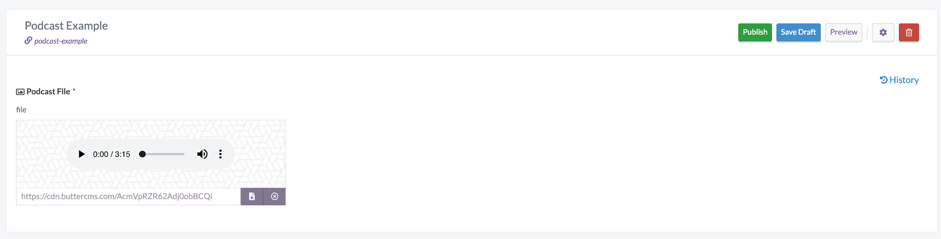

}Before analyzing the code for the handler of the PodcastIntent, let’s take a look at our ButterCMS configuration. I created a custom page type for the podcasts, with a field of type Media.

After that, we can create a new page for every podcast episode.

We can now use the ButterCMS SDK to fetch the last page of the podcast type and extract the filename.

    const secrets = require('../secrets.json')
    const butter = require('buttercms')(secrets.BUTTER_CMS_API_KEY)

    const PodcastIntentHandler = {
      canHandle (handlerInput) {
        return handlerInput.requestEnvelope.request.type === 'IntentRequest' &&
          (handlerInput.requestEnvelope.request.intent.name === 'PodcastIntent' ||
          handlerInput.requestEnvelope.request.intent.name === 'AMAZON.ResumeIntent')
      },
      async handle (handlerInput) {
        // Fetch the most recent page of type "podcast" and read its media field
        const response = await butter.page.list('podcast', { page: 1, page_size: 1 })
        const file = response.data.data[0].fields.podcast_file

        return handlerInput.responseBuilder
          // The fourth argument is the start offset in milliseconds (0 = from the beginning)
          .addAudioPlayerPlayDirective('REPLACE_ALL', file, 'podcast', 0)
          .withShouldEndSession(true)
          .getResponse()
      }
    }

    module.exports = PodcastIntentHandler

To play an audio file we need to use the addAudioPlayerPlayDirective method. The first parameter is the behavior that we want to give to the Alexa smart speaker. In this case, I’m telling it to replace the current stream with this one. Another possible value for this parameter is “ENQUEUE”, which adds the new file to the playing queue. The second one is the URL of the file that we want to play. The third one is a token, a unique name for this stream; in this scenario, we can just call it “podcast”. The last one is the offset, in milliseconds, from which the playback should start; 0 plays the file from the beginning.
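It can help to see what such a directive looks like once it is part of the response. The sketch below builds a comparable object by hand; `buildPlayDirective` is a hypothetical helper and not an SDK function, and the field names follow my understanding of the AudioPlayer interface, so treat them as an approximation. As far as I know, the “ENQUEUE” behavior also expects an `expectedPreviousToken` field, which this sketch leaves out.

```javascript
// Play behaviors accepted by this sketch.
const PLAY_BEHAVIORS = ['REPLACE_ALL', 'ENQUEUE', 'REPLACE_ENQUEUED']

// Hypothetical helper that mirrors the arguments described in the article:
// behavior, file URL, token, plus a start offset in milliseconds.
function buildPlayDirective (playBehavior, url, token, offsetInMilliseconds = 0) {
  if (!PLAY_BEHAVIORS.includes(playBehavior)) {
    throw new Error(`Unknown play behavior: ${playBehavior}`)
  }
  return {
    type: 'AudioPlayer.Play',
    playBehavior,
    audioItem: {
      // The stream describes what to play and where to start from.
      stream: { url, token, offsetInMilliseconds }
    }
  }
}
```

With the values from the handler, the call would be `buildPlayDirective('REPLACE_ALL', file, 'podcast')`, where `file` is the URL read from the ButterCMS page.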

The last thing that we need to do is stop the audio when the user wants to quit our Alexa Skill.

    const CancelAndStopIntentHandler = {
      canHandle (handlerInput) {
        ...
      },
      handle (handlerInput) {
        const speechText = 'Goodbye!'

        return handlerInput.responseBuilder
          .speak(speechText)
          .addAudioPlayerStopDirective()
          .getResponse()
      }
    }

    module.exports = CancelAndStopIntentHandler

Conclusion

We have learned the basic concepts of Alexa Skills Development, and how to put a simple voice command Skill on the cloud to test it with a real device. We also covered how to integrate your ButterCMS content with your custom Amazon Alexa Skill, letting your users access that content via a voice user interface with very little effort. If you’re curious, you can take a look at the complete code for this simple project on GitHub.

Would you try to build your own custom Amazon Alexa Skill? Let me know in the comments below!


Francesco is a developer and consultant @ flowing, author of “Frameworkless Front-end Development” for Apress, and speaker for many tech conferences and meetups. When Francesco isn't focused on developer work, he spends his time playing Playstation and cooking any strange, new ethnic food that piques his interest.